
Exploring Frequency Adversarial Attacks for Face Forgery Detection (Hybrid Attack)

In recent years, artificial intelligence (AI) has made breakthrough progress, and with it comes the risk that AI technology may be maliciously abused. For example, current AI-based image synthesis can create ultra-realistic virtual characters, raising widespread concern that "a picture no longer proves the truth." If criminals use synthetic faces to commit fraud, spread slander, or steal confidential information, the result could be a serious negative impact on public security and social stability.

The digital human research team led by Professor Xiaokang Yang of the AI Institute at Shanghai Jiao Tong University (SJTU) is committed to verifying the authenticity of face images and videos from a machine learning perspective and to developing trustworthy AI. The team recently made significant progress on face forgery detection, which aims to distinguish real faces from AI-synthesized ones. In particular, two of its papers, "End-to-End Reconstruction-Classification Learning for Face Forgery Detection" and "Exploring Frequency Adversarial Attacks for Face Forgery Detection," have been accepted to the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2022, one of the top-tier international conferences on AI. The two papers, co-supervised by Professor Xiaokang Yang and Associate Professor Chao Ma, achieve promising results in both the accuracy and the robustness of face forgery detection, contributing to an “AI swordsman” that guards face authentication and virtual digital human applications and, ultimately, enhances human well-being.

Identifying whether a face image is forged currently faces two technical challenges. The first is detecting face images created by unknown manipulation techniques: mainstream detection methods are designed around a few specific forgery techniques, so they perform poorly on manipulations they have not seen. The second is that face forgery itself uses deep learning to tamper with face images, yet current detection methods do not consider that deep learning can also be used to attack the detectors themselves. To address the first challenge, the research team proposed a face forgery detection method called RECCE, which uses reconstruction learning to highlight forgery traces so that the detector becomes aware of even unknown forgery patterns. To address the second, the team designed an adversarial attack on face forgery detection: a hybrid attack that deceives detection algorithms by making imperceptible modifications to forged face images. By exposing the weaknesses of current face forgery detectors, this attack provides a way to evaluate and improve their robustness.

End-to-End Reconstruction-Classification Learning for Face Forgery Detection (RECCE)

Existing face forgery detection methods mainly rely on specific forgery patterns introduced during the synthesis process to distinguish fake faces from real ones. For example, the Face X-Ray algorithm proposed by Microsoft Research Asia treats the blending boundary in a synthetic face as evidence of forgery. It assumes that every synthetic face image is composed of at least two superimposed images: the inner face region comes from one image, and the surrounding region comes from another. However, as forgery technology develops, detectors that over-focus on specific known forgery patterns easily fail to identify forged samples generated by novel synthesis methods. Meanwhile, noise introduced during image transmission, such as compression, blurring, and saturation misalignment, may also destroy the known forgery patterns and thereby reduce the accuracy of face forgery detectors.
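The blending assumption can be made concrete. As we understand the Face X-Ray formulation, a blended image is I_M = M ⊙ I_F + (1 − M) ⊙ I_B for a soft mask M, and the "X-ray" boundary map is B = 4·M·(1 − M), which is nonzero only where the two source images are mixed. The NumPy sketch below illustrates this on toy arrays; the function names `blend_faces` and `face_xray` and the circular mask are hypothetical, and the idea belongs to Face X-Ray rather than to the SJTU work.

```python
import numpy as np


def blend_faces(foreground: np.ndarray, background: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Blend an inner face into a background image: I_M = M * I_F + (1 - M) * I_B.

    `mask` has shape (H, W) with values in [0, 1]; the images have shape (H, W, 3).
    """
    m = mask[..., None]  # broadcast the mask over the colour channels
    return m * foreground + (1.0 - m) * background


def face_xray(mask: np.ndarray) -> np.ndarray:
    """Blending-boundary map B = 4 * M * (1 - M): 0 where M is 0 or 1, 1 where M = 0.5."""
    return 4.0 * mask * (1.0 - mask)


# Toy 8x8 example with a soft circular mask (1 inside, 0 outside, a soft ring in between)
yy, xx = np.mgrid[0:8, 0:8]
dist = np.sqrt((yy - 3.5) ** 2 + (xx - 3.5) ** 2)
mask = np.clip(3.5 - dist, 0.0, 1.0)

inner_face = np.ones((8, 8, 3))   # stands in for the source face
background = np.zeros((8, 8, 3))  # stands in for the target image
fake = blend_faces(inner_face, background, mask)
xray = face_xray(mask)
print(np.round(xray, 2))
```

The printed map is zero in the center and at the corners and lights up only on the soft ring, which is the boundary cue Face X-Ray relies on, and which compression or blurring can weaken.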

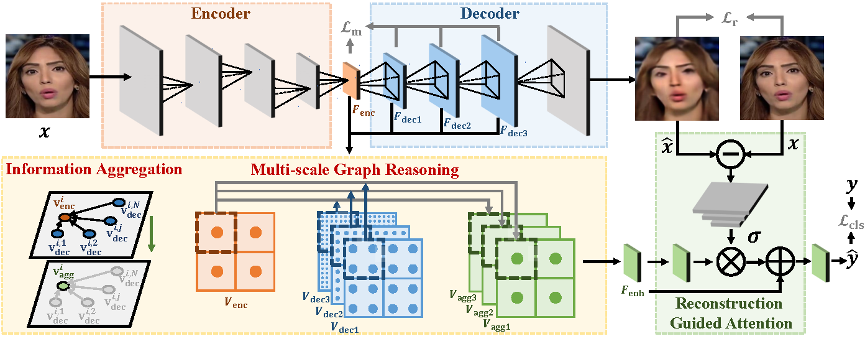

The research team approaches face forgery detection from a new perspective and designs a reconstruction-classification learning framework called RECCE. It learns the common features of real faces by reconstructing real face images, and it mines the essential differences between real and fake faces through classification learning. Briefly, a reconstruction network is trained on real face images only, and the latent features of the reconstruction network are used to separate real faces from fake ones. Because the distributions of real and fake faces differ, the reconstruction difference is more pronounced for fake faces and can indicate the likely forged regions.
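The core idea is that a model trained to reconstruct only real faces will reconstruct fake faces poorly. This can be illustrated with a much simpler stand-in. The sketch below replaces RECCE's encoder-decoder network with PCA (a linear autoencoder) fitted on "real" vectors only, and it uses the reconstruction error as an anomaly score. The synthetic data, the subspace dimension `k`, and the function names are assumptions for illustration. RECCE itself also trains a classifier on the latent features, which is not shown here.

```python
import numpy as np


def fit_real_subspace(real: np.ndarray, k: int):
    """Learn a k-dimensional linear 'reconstruction model' (PCA) from real samples only."""
    mean = real.mean(axis=0)
    # Rows of vt are the principal directions of the centred real data
    _, _, vt = np.linalg.svd(real - mean, full_matrices=False)
    return mean, vt[:k]


def reconstruct(x: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Encode onto the real-face subspace (latent codes), then decode back."""
    codes = (x - mean) @ basis.T
    return mean + codes @ basis


def reconstruction_error(x: np.ndarray, mean: np.ndarray, basis: np.ndarray) -> np.ndarray:
    """Mean squared reconstruction error per sample."""
    return np.mean((x - reconstruct(x, mean, basis)) ** 2, axis=1)


rng = np.random.default_rng(0)
d, k = 64, 8
true_basis = np.linalg.qr(rng.normal(size=(d, k)))[0].T  # k x d, orthonormal rows
real_train = rng.normal(size=(500, k)) @ true_basis
real_test = rng.normal(size=(100, k)) @ true_basis
# Toy "fake" samples: real-like content plus an artefact outside the real subspace
fake_test = rng.normal(size=(100, k)) @ true_basis + 0.5 * rng.normal(size=(100, d))

mean, basis = fit_real_subspace(real_train, k)
err_real = reconstruction_error(real_test, mean, basis)
err_fake = reconstruction_error(fake_test, mean, basis)
print(err_real.mean(), err_fake.mean())
```

Real test samples are reconstructed almost perfectly, but the artefacts in the fake samples cannot be represented and remain in the error. A deep reconstruction network plays the same role for far more complex face images.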

The pipeline of the proposed reconstruction-classification learning framework (RECCE)

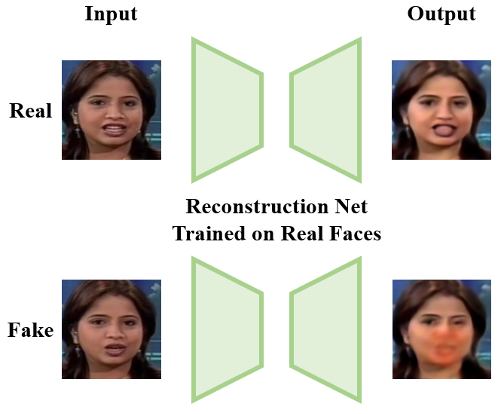

The figure above shows two inputs: a real face and a fake face whose mouth has been forged. The reconstruction method designed by the research team effectively distinguishes the real face from the fake one and accurately indicates the forged region (the red mask on the mouth), which makes the intelligent detection technique more interpretable.
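The article does not explain how the red mask is produced. One plausible reading is that the per-pixel reconstruction difference is thresholded. The hypothetical NumPy sketch below does exactly that on a toy 16x16 "face" whose "mouth" patch has been altered. The function name `forgery_mask`, the threshold value, and the patch location are arbitrary assumptions.

```python
import numpy as np


def forgery_mask(image: np.ndarray, reconstruction: np.ndarray, threshold: float):
    """Return the per-pixel reconstruction difference (mean over channels) and a binary mask."""
    diff = np.abs(image - reconstruction).mean(axis=-1)
    return diff, diff > threshold


rng = np.random.default_rng(0)
real_content = rng.uniform(0.2, 0.8, size=(16, 16, 3))

# Toy "fake" face: the mouth region (rows 10-12, columns 4-11) has been edited
fake_face = real_content.copy()
fake_face[10:13, 4:12] += 0.3

# A reconstructor trained on real faces is assumed to reproduce real-looking content,
# so its output stays close to `real_content` (small noise models imperfect reconstruction)
reconstruction = real_content + rng.normal(scale=0.01, size=real_content.shape)

diff, mask = forgery_mask(fake_face, reconstruction, threshold=0.1)
print(mask.astype(int))
```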

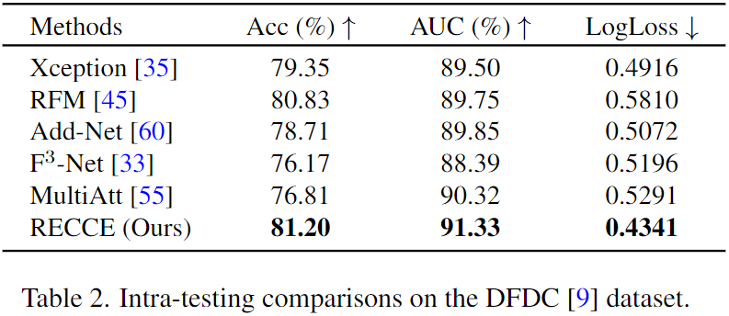

The research team conducted extensive experiments on commonly used face forgery detection datasets such as FaceForensics++ (FF++) and WildDeepfake. The results demonstrate that the proposed method achieves state-of-the-art face forgery detection performance. In the challenging FF++ c40 (low-quality) setting in particular, the proposed method improves AUC by 1.72% over the previous state-of-the-art approach, F3-Net. To validate the method in more complicated scenarios, the researchers further evaluated it on DFDC, one of the largest face forgery datasets. The experimental results are shown in the table below.

The table shows that the proposed method achieves the best performance and outperforms the second-best approach by 1.01% in AUC. These results further verify the superiority of the proposed method over existing approaches.
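The gains above are reported in AUC (area under the ROC curve). AUC equals the probability that a randomly chosen fake sample receives a higher "fake" score than a randomly chosen real sample, with ties counted as one half. The minimal sketch below computes AUC directly from that definition. The scores are made-up numbers, and practical evaluations typically use a library routine such as scikit-learn's `roc_auc_score`.

```python
import numpy as np


def auc(scores_fake, scores_real) -> float:
    """AUC as P(score_fake > score_real) + 0.5 * P(tie), over all fake/real pairs."""
    f = np.asarray(scores_fake, dtype=float)[:, None]  # column: one row per fake sample
    r = np.asarray(scores_real, dtype=float)[None, :]  # row: one column per real sample
    return float(np.mean((f > r) + 0.5 * (f == r)))


# Hypothetical detector scores (higher = more likely fake)
fake_scores = [0.9, 0.8, 0.4]
real_scores = [0.1, 0.5, 0.3]
print(auc(fake_scores, real_scores))  # 8 of 9 pairs are ranked correctly, so 8/9
```

The pairwise form costs O(n·m) memory. That cost is fine for illustration, but rank-based implementations scale better on datasets the size of DFDC.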

Exploring Frequency Adversarial Attacks for Face Forgery Detection (Hybrid Attack)

Facial manipulation mainly aims to fool human beings, whereas adversarial attacks aim to fool well-trained face forgery detectors by injecting imperceptible perturbations into image pixels. Studying such attacks is therefore important for evaluating the robustness of face forgery detectors.

Existing attacks on face forgery detection mainly generate lightweight perturbations that deceive detectors into making wrong predictions. For example, adding adversarial perturbations to a fake face image can fool a detector into classifying it as real. The PGD (projected gradient descent) attack proposed by a research group at MIT iteratively adds perturbations along the back-propagated gradient of the network to the original image. After each step, it projects the result back into a small neighborhood of the original image, and the final iterate is the adversarial example. However, when applied to face forgery detection, this method tends to overfit the specific network being attacked and therefore transfers poorly to other detectors. It also degrades the image quality of the adversarial examples.
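To make the PGD procedure concrete, the sketch below runs an L∞ PGD attack against a toy linear "fake face" detector, p(fake) = sigmoid(w·x + b). For this detector, the input gradient has a closed form. The detector, its weights, and the settings `eps`, `alpha`, and `steps` are hypothetical. A real attack would back-propagate through a deep detector instead.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def pgd_attack(x, w, b, eps=0.03, alpha=0.01, steps=10):
    """L-infinity PGD against a linear detector p(fake) = sigmoid(w . x + b).

    Each step ascends the loss L = -log p(fake) for the true label "fake",
    then projects back onto the eps-ball around x and the valid pixel range [0, 1].
    """
    x_adv = x.copy()
    for _ in range(steps):
        p = sigmoid(w @ x_adv + b)
        grad = -(1.0 - p) * w                      # dL/dx for L = -log p(fake)
        x_adv = x_adv + alpha * np.sign(grad)      # gradient-sign ascent step
        x_adv = np.clip(x_adv, x - eps, x + eps)   # projection onto the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)           # keep a valid image
    return x_adv


rng = np.random.default_rng(0)
w = rng.normal(size=256)                  # weights of the toy detector
x = rng.uniform(0.2, 0.8, size=256)       # a flattened "fake" image
b = 2.0 - w @ x                           # chosen so the detector starts at logit 2 (fake)

x_adv = pgd_attack(x, w, b)
print(sigmoid(w @ x + b), sigmoid(w @ x_adv + b))
```

Every pixel moves by at most `eps`, yet the detector's verdict flips from "fake" to "real". Because the perturbation is computed for one specific set of weights, it illustrates why PGD examples can overfit the attacked model.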

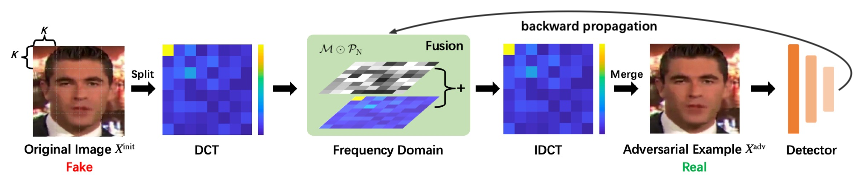

The research team led by Professor Xiaokang Yang observed that existing face forgery detectors commonly use the frequency differences between real and fake faces as a crucial clue. Motivated by this, the researchers propose a frequency adversarial attack against face forgery detectors. Concretely, they apply the discrete cosine transform (DCT) to the input images and introduce adversarial perturbations into the salient regions of the frequency domain. They then apply the inverse DCT to return to the spatial domain and obtain the final adversarial examples. In addition, inspired by meta-learning, they propose a hybrid adversarial attack that operates in both the spatial and frequency domains. The hybrid attack maintains high attack success rates in the white-box setting while improving transferability to unknown detectors in the black-box setting.
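The frequency-domain step can be sketched under strong simplifications. In the sketch below, a toy linear detector acts on a 16x16 grayscale image. The pixel gradient is mapped into the 2D orthonormal DCT domain: for X = C x Cᵀ, the gradient with respect to X is C G Cᵀ. A sign step is then applied only to a hypothetical `band` of "salient" coefficients, and the inverse DCT brings the perturbation back to pixels. Setting `hybrid=True` simply alternates frequency and spatial steps. This alternation is an assumed simplification, not the meta-learning scheme used in the paper. The method's salient-region selection is also replaced here by a fixed low-frequency mask.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C: coefficients X = C @ x @ C.T, inverse x = C.T @ X @ C."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def frequency_attack(x, W, b, C, band, eps=0.03, alpha=0.01, steps=10, hybrid=False):
    """Sign-gradient attack on a linear detector p(fake) = sigmoid(sum(W * x) + b).

    Frequency steps perturb only the DCT coefficients selected by `band` (a 0/1 mask);
    with hybrid=True, the steps with odd index t (1, 3, ...) are spatial-domain steps instead.
    """
    x_adv = x.copy()
    for t in range(steps):
        p = sigmoid(np.sum(W * x_adv) + b)
        grad_x = -(1.0 - p) * W                   # dL/dx for L = -log p(fake)
        if hybrid and t % 2 == 1:
            step = alpha * np.sign(grad_x)        # spatial-domain step
        else:
            grad_f = C @ grad_x @ C.T             # same gradient w.r.t. the DCT coefficients
            delta_f = alpha * np.sign(grad_f) * band
            step = C.T @ delta_f @ C              # inverse DCT of the perturbation
        x_adv = np.clip(x_adv + step, x - eps, x + eps)  # stay within the eps-ball
        x_adv = np.clip(x_adv, 0.0, 1.0)                 # keep a valid image
    return x_adv


n = 16
C = dct_matrix(n)
band = np.zeros((n, n))
band[:4, :4] = 1.0                       # hypothetical "salient" low-frequency band

rng = np.random.default_rng(0)
W = rng.normal(size=(n, n))              # weights of the toy detector
x = rng.uniform(0.3, 0.7, size=(n, n))   # a toy "fake" image
b = 2.0 - np.sum(W * x)                  # detector starts at logit 2 (fake)

x_freq = frequency_attack(x, W, b, C, band)
x_hyb = frequency_attack(x, W, b, C, band, hybrid=True)
print(sigmoid(np.sum(W * x) + b), sigmoid(np.sum(W * x_freq) + b), sigmoid(np.sum(W * x_hyb) + b))
```

Because the DCT matrix is orthonormal, the gradient can be moved between domains with two matrix products, and a perturbation defined on selected coefficients maps back to a smooth pixel pattern.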

The pipeline of the frequency adversarial attack.

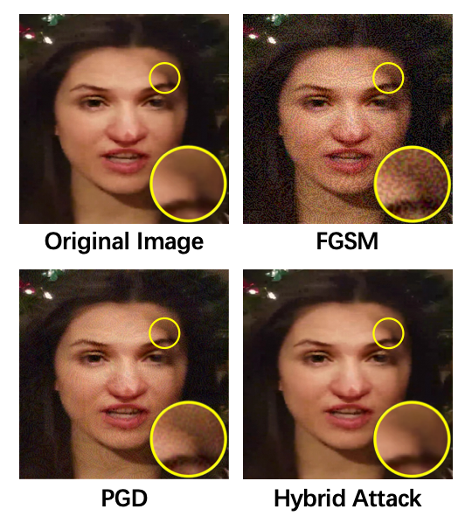

Illustration of adversarial examples generated by different methods. The proposed hybrid attack achieves better image quality and yields a stronger attack.

Unlike the PGD attack proposed by MIT, the proposed method adds perturbations in the frequency domain, where they are invisible to humans, so it does not degrade the visual quality of the face image. The attack effectively fools both state-of-the-art spatial-based and frequency-based detectors. Extensive experiments show that it achieves state-of-the-art attack results on multiple standard benchmarks, and the adversarial examples it generates can be used to evaluate the robustness of face forgery detectors.
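The article does not say how image quality was measured. Peak signal-to-noise ratio (PSNR) is one common way to quantify how visible a perturbation is. The sketch below computes PSNR for images scaled to [0, 1]; it is an assumption, not the paper's evaluation protocol. A higher PSNR means the adversarial example is closer to the original image.

```python
import numpy as np


def psnr(reference: np.ndarray, distorted: np.ndarray, max_val: float = 1.0) -> float:
    """PSNR in dB: 10 * log10(max_val^2 / MSE); infinite for identical images."""
    mse = np.mean((np.asarray(reference, dtype=float) - np.asarray(distorted, dtype=float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))


x = np.full((16, 16), 0.5)
print(psnr(x, x + 0.01))  # uniform 0.01 perturbation: MSE = 1e-4, about 40 dB
print(psnr(x, x + 0.05))  # larger perturbation: MSE = 2.5e-3, about 26 dB
```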

These two papers are the result of a collaboration between Professor Xiaokang Yang's team at the AI Institute of SJTU and Tencent Youtu Lab. The proposed methods can be applied in various scenarios, such as remote identity authentication and image or video forgery detection, and help meet the security requirements of face identity authentication. Junyi Cao (graduate student) and Shuai Jia (Ph.D. student) from the AI Institute of SJTU are the first authors of the two papers, respectively. Associate Professor Dr. Chao Ma from the AI Institute of SJTU is the corresponding author of both papers. Coauthors include Taiping Yao, Shen Chen, Bangjie Yin, and Dr. Shouhong Ding from Tencent Youtu Lab.

Since 2020, demand for technology that generates digital humans at scale has grown rapidly. The digital human research team led by Professor Xiaokang Yang has developed generative digital human theories and technologies that address several challenging problems: the generalizability, drivability, interaction, and credible authentication of digital humans. This work aims to improve the quality and efficiency of digital human generation and to enhance the perception, interaction, and credible authentication of digital humans in digital space. In the future, the AI Institute will continue to follow SJTU's overall plan to build a leading interdisciplinary AI research hub and to nurture the AI innovation ecosystem at SJTU.
