
Professor Yanfeng Wang's group publishes a foundation model for chest radiology images in Nature Communications

On July 19th, the research paper “Knowledge-enhanced visual-language pre-training on chest radiology images”, by a joint team from Shanghai Jiao Tong University and Shanghai AI Laboratory, was published in Nature Communications. The study focuses on medical artificial intelligence and proposes KAD (Knowledge-enhanced Auto Diagnosis), the first knowledge-enhanced foundation model for chest X-rays.

Figure 1: Knowledge-enhanced Visual-Language Pre-training on Chest Radiology Images

Foundation models have shown great promise in transferring features and generalizing to a wide spectrum of downstream tasks, for example in natural language processing and computer vision. However, the development of foundation models in the medical domain has lagged far behind, because medical tasks require fine-grained recognition and because complex, specialized medical terminology is difficult to learn. To model the intricate, specialized concepts of medical applications effectively, domain knowledge is therefore indispensable. Integrating medical knowledge into the models is expected to improve their performance and applicability in practical healthcare scenarios.

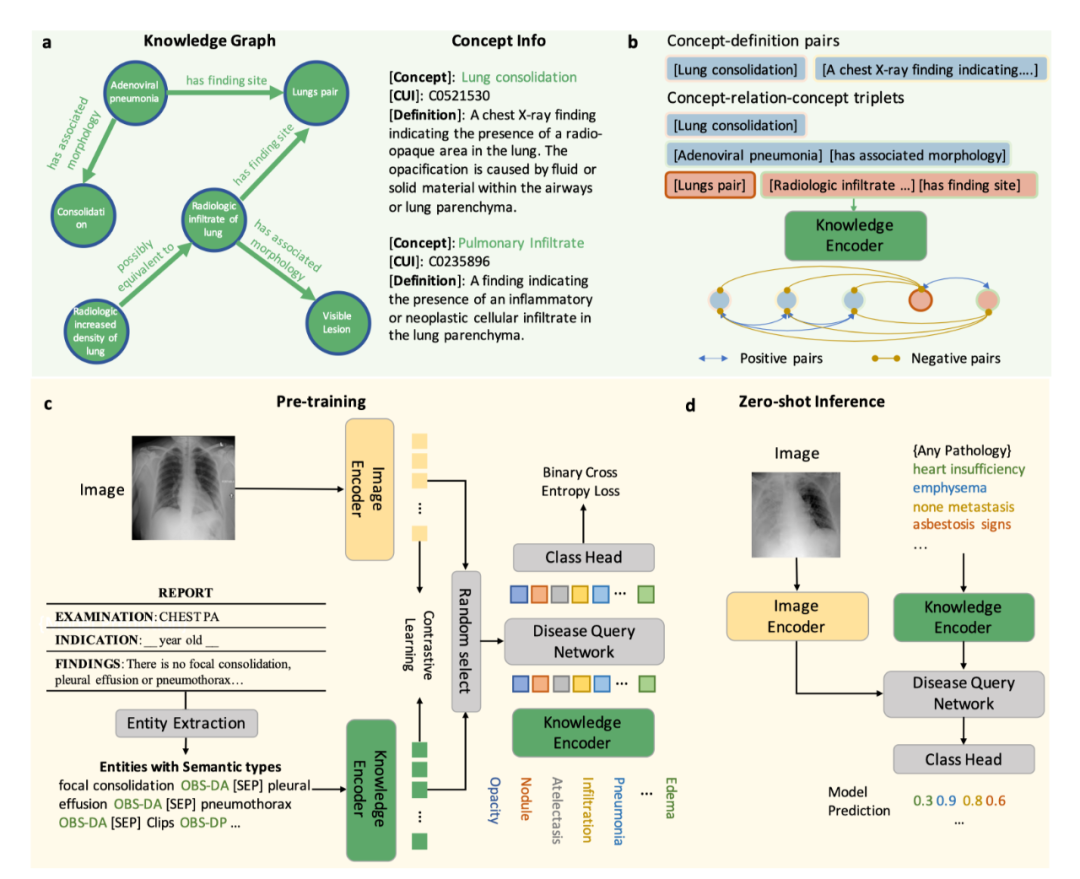

Figure 2: Overview of the KAD workflow

To address this challenge, the team from Shanghai Jiao Tong University and Shanghai AI Laboratory proposed Knowledge-enhanced Auto Diagnosis (KAD), which leverages existing medical domain knowledge to guide vision-language pre-training on paired chest X-rays and radiology reports. KAD uses a text encoder to embed high-quality medical knowledge graphs into a latent space, and then trains the visual and language branches jointly to learn knowledge-enhanced representations. KAD supports flexible zero-shot evaluation on arbitrary diseases or radiology findings without requiring additional annotations. This work offers a promising way to inject domain knowledge when developing foundation models for AI-assisted diagnosis in radiography.
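The article does not spell out KAD's training objective. As a rough illustration of what "visual-language joint training" on paired X-rays and reports commonly looks like, the sketch below implements a generic CLIP-style symmetric contrastive (InfoNCE) loss in plain Python. The function names, the temperature of 0.07 and the toy embeddings are assumptions for illustration only, not KAD's actual method.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]

def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss over a batch of paired image/report embeddings.

    Pair i (image_embs[i], text_embs[i]) is the positive; every other pairing
    in the batch acts as a negative. Temperature 0.07 is an assumed value.
    """
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image i, report j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image-to-text direction: each image should pick its own report.
    i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image direction: each report should pick its own image.
    cols = [[logits[i][j] for i in range(n)] for j in range(n)]
    t2i = -sum(log_softmax(cols[j])[j] for j in range(n)) / n
    return (i2t + t2i) / 2

# Hypothetical toy embeddings: two X-rays and their two matching reports.
images = [[1.0, 0.1], [0.1, 1.0]]
reports = [[0.9, 0.0], [0.0, 0.8]]
print(clip_style_loss(images, reports))        # correctly paired batch
print(clip_style_loss(images, reports[::-1]))  # mismatched pairs
```

Correctly paired images and reports give a much lower loss than mismatched ones; minimizing this loss pulls matching embeddings together in the shared latent space, which is what later makes text-driven zero-shot queries possible.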

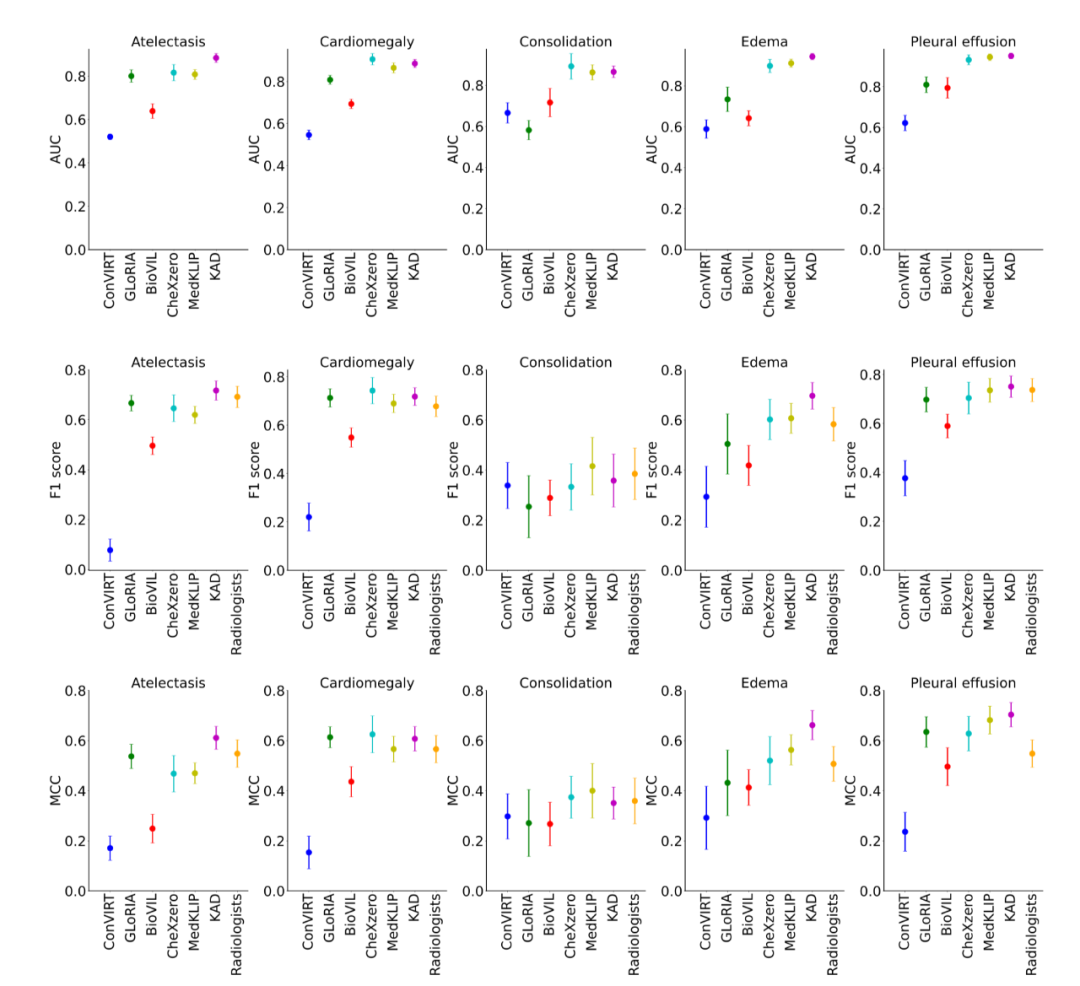

Figure 3: Comparison of the proposed KAD with state-of-the-art (SOTA) models and three board-certified radiologists on five competition pathologies

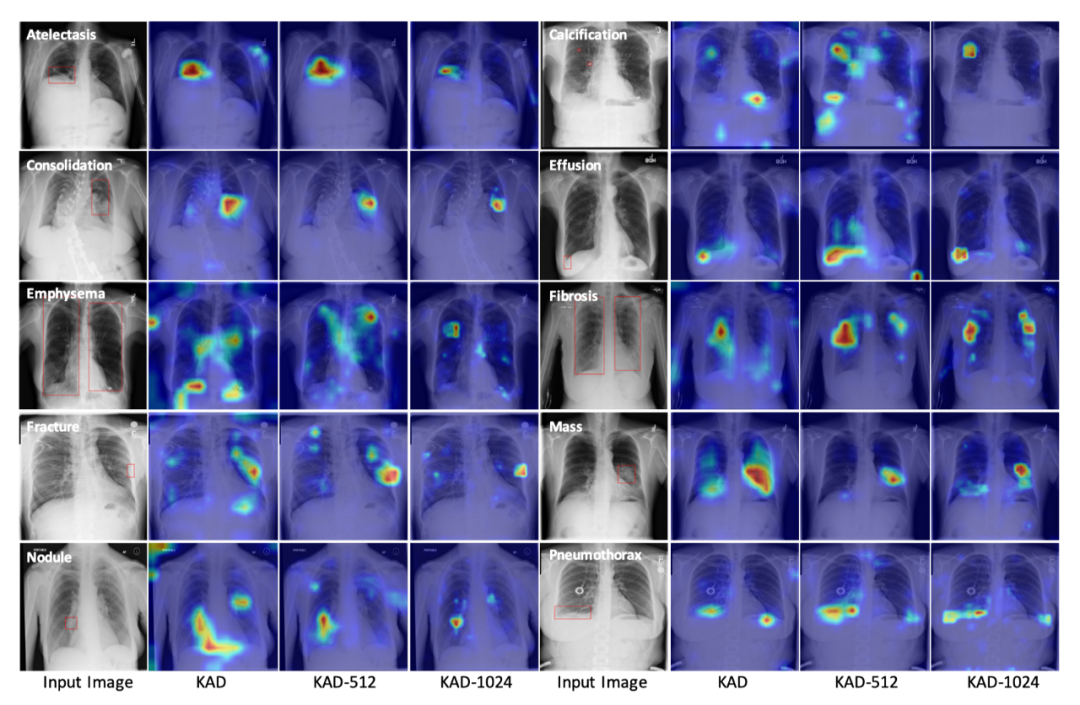

The specialized nature of the medical field has greatly restricted the practical application of general-purpose foundation models in real clinical diagnosis and treatment. KAD offers a viable approach to knowledge-enhanced pre-training of foundation models: its training framework requires only image-report pairs and does not rely on manual annotations. On downstream chest X-ray diagnostic tasks, KAD substantially outperforms existing approaches, including those developed by Stanford and Microsoft, and even reaches accuracy comparable to that of professional radiologists without any supervised fine-tuning. It supports open-set disease diagnosis and localizes lesions in the form of attention maps, which makes the model more interpretable. Notably, the knowledge-enhanced representation learning approach proposed in this research is not limited to chest X-rays; it can be applied to other organs and imaging modalities, which is significant for bringing medical foundation models into clinical practice.

Figure 4: Visualization of zero-shot disease grounding
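To make "zero-shot diagnosis" and "attention-map localization" more concrete, here is a minimal sketch of how such outputs are commonly computed from a shared embedding space. It assumes a generic CLIP-style setup: a finding is scored by comparing the image embedding against "finding present" and "finding absent" text embeddings, and a disease query attends over image patch embeddings with scaled dot-product attention. KAD's own query mechanism may differ; all names, the temperature and the toy vectors are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def zero_shot_probability(image_emb, pos_text_emb, neg_text_emb, temperature=0.07):
    """Probability a finding is present, via a two-way softmax over
    'present' vs 'absent' prompt embeddings (assumed scheme)."""
    s_pos = cosine(image_emb, pos_text_emb) / temperature
    s_neg = cosine(image_emb, neg_text_emb) / temperature
    m = max(s_pos, s_neg)
    e_pos, e_neg = math.exp(s_pos - m), math.exp(s_neg - m)
    return e_pos / (e_pos + e_neg)

def attention_map(query_emb, patch_embs, grid_w):
    """Scaled dot-product attention of one disease query over image patches,
    reshaped row by row into a heat map with grid_w columns."""
    d = len(query_emb)
    scores = [sum(q * p for q, p in zip(query_emb, patch)) / math.sqrt(d)
              for patch in patch_embs]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]  # weights sum to 1
    return [weights[r * grid_w:(r + 1) * grid_w]
            for r in range(len(weights) // grid_w)]

# Hypothetical toy embeddings for one X-ray and two text prompts.
image = [0.9, 0.2, 0.1]
pneumonia = [1.0, 0.0, 0.0]     # stands in for "pneumonia"
no_pneumonia = [0.0, 1.0, 0.0]  # stands in for "no pneumonia"
print(zero_shot_probability(image, pneumonia, no_pneumonia))

# Hypothetical 2x2 grid of patch embeddings; the bottom-right patch matches the query.
query = [1.0, 0.0]
patches = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0], [3.0, 0.0]]
for row in attention_map(query, patches, grid_w=2):
    print(row)
```

Because the disease is specified only as text, any finding that can be phrased as a prompt can be scored without new labels, and the attention weights can be upsampled onto the X-ray as a heat map for grounding.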

The first author of the paper is Xiaoman Zhang, a PhD student at the Cooperative Medianet Innovation Center, SEIEE, Shanghai Jiao Tong University. The corresponding authors are Professor Yanfeng Wang and Professor Weidi Xie of the same research group. Professor Yanfeng Wang's team has long worked on artificial intelligence algorithms and their applications in media and medicine, and has published numerous papers in internationally renowned journals and conferences such as TPAMI, TIP, CVPR and ICML. The related research has been funded by the National Key R&D Program of China and the National Natural Science Foundation of China.

Paper link: https://rdcu.be/dhWz0
